How to Use Whisper API to Transcribe Videos (Python Tutorial)

Contents

In recent years, Artificial Intelligence (AI) has made significant advancements and has become increasingly accessible. Many AI functionalities can be implemented using APIs, which allow developers to integrate AI into their applications easily.

One such API is the Whisper API, which is used for transcribing audio into text. This is particularly useful for video editing and content creation applications, as it allows developers to add captions or subtitles generation functionality to their applications without having to train the AI model themselves.

In this tutorial, we’ll learn how to use the Whisper API to transcribe this video. We’ll also look at other options, including one that can automate the process of transcribing video and adding subtitles, producing the result below:

What Does Transcribe Mean

To transcribe means to convert audio or video content into written text. It could be done manually by a person, or automatically using an application. Transcribing an audio or video into text can make the content accessible to a wider audience. For example, the transcribed text can be helpful in adding subtitles to video, ensuring that everyone regardless of their hearing ability and language proficiency, can understand the content.

Besides that, some people prefer reading to listening as a mode of learning. Generating transcripts for podcasts or interviews allows them to quickly skim or search through the content without having to listen to the entire audio.

What is Whisper API?

Whisper API is offered by OpenAI for users to access their speech-to-text model, Whisper. The AI model has been trained on 680,000 hours of multilingual and multitask supervised data collected from the web. It is capable of transcribing in up to 60 languages, including English, Chinese, Arabic, Hindi, and French.

Besides transcribing the content into its original language, Whisper API can also transcribe it into English. This helps content in any language to reach a global audience and saves time and money compared to manual translation.

🐻 Bear Tips: Whisper API currently supports files up to 25 MB in various formats, including

m4a,mp3,mp4,mpeg,mpga,wav, andwebm.

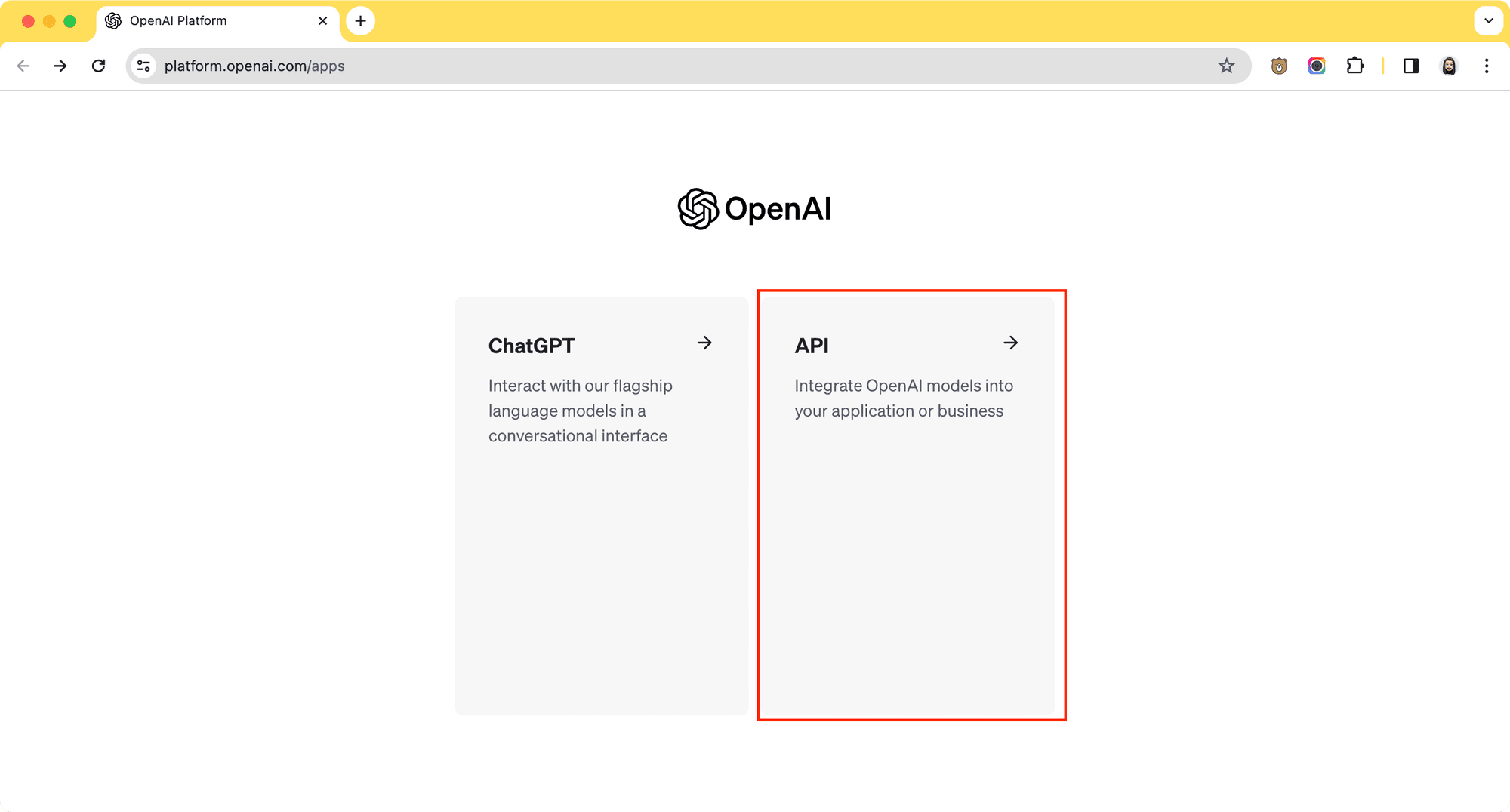

Getting the OpenAI API Key

To use the Whisper API, you will need an OpenAI API key. Follow these steps to obtain one:

Sign up for an OpenAI account and log in to the API dashboard.

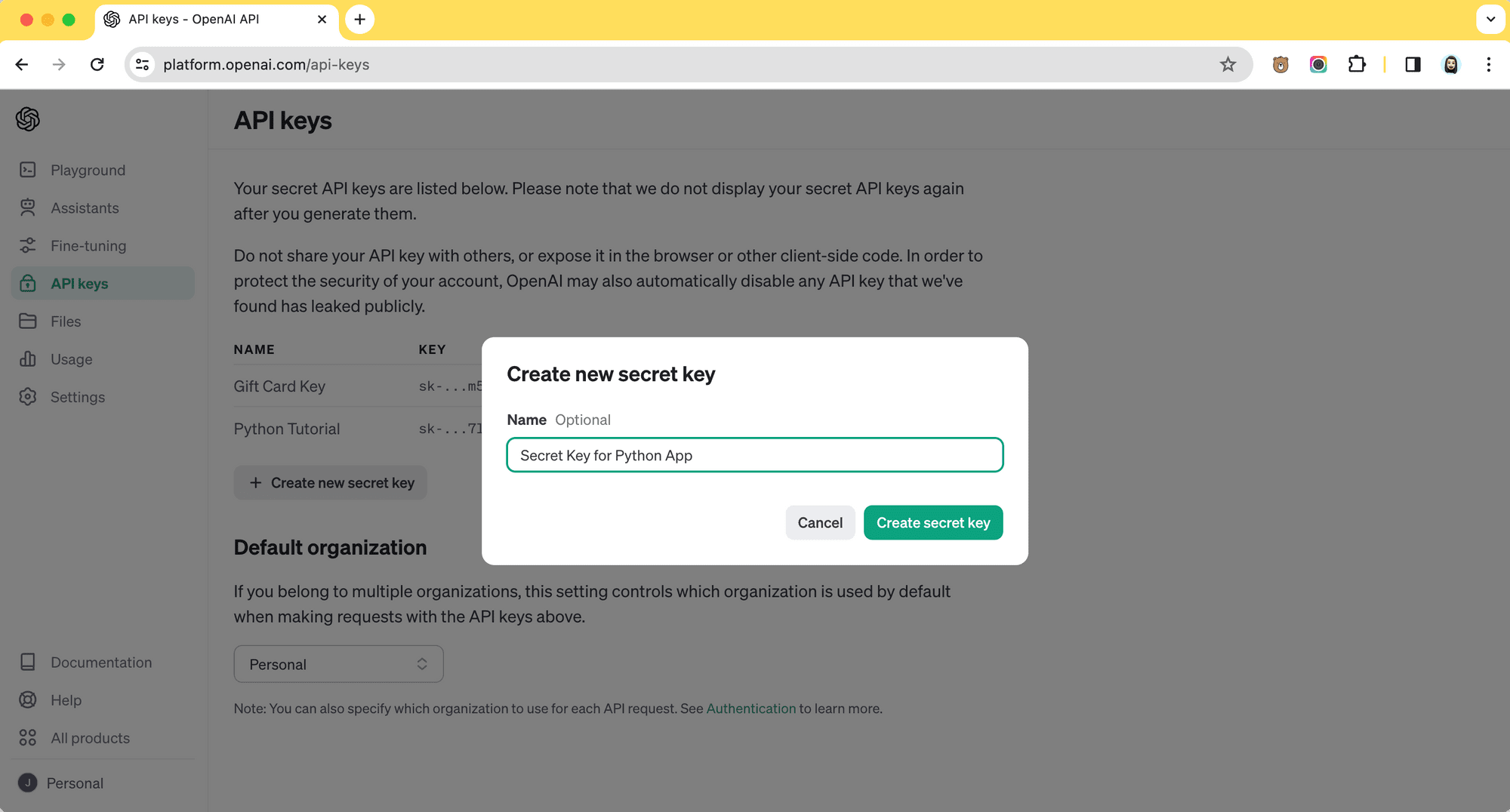

Then, go to the “API Keys” tab and click “Create new secret key” to create a new secret/API key.

Copy the key and save it in a secure location for later use in your code.

Transcribing Videos Using Whisper API

Currently, the Whisper API only supports audio files. For transcribing videos, you have two options:

- Convert your video file into an audio file in one of the supported formats manually, or

- Use a Python library to extract audio from your video file and pass it to the Whisper API

You can convert the video manually and follow the official Whisper API documentation to transcribe the audio file. Otherwise, you can follow this tutorial to transcribe the video directly in Python.

In your development environment, create a new Python file (eg. script.py). Then, follow the instructions below:

Step 1. Install and Import the OpenAI and MoviePy Libraries

In your terminal/command line, run the command below to install the OpenAI and MoviePy libraries_:_

pip install openai moviepy

Note: If you’re using Python 3.x, replace pip in the command above with pip3.

Then, import the libraries in your Python file and create a new instance of OpenAI:

from openai import OpenAI

from moviepy.editor import VideoFileClip

client = OpenAI(api_key="your_openai_api_key")

Step 2. Extract the Audio from the Video File

Add the code below to extract the audio from the video file and save it as an output:

video = VideoFileClip("video.mp4")

audio = video.audio

audio.write_audiofile("output_audio.mp3")

Step 3. Transcribe the Audio File

You can now open the audio file using Python’s built-in open() function and pass it to the Whisper API to transcribe it into text:

audio_file= open("output_audio.mp3", "rb")

transcript = client.audio.transcriptions.create(

model="whisper-1",

file=audio_file

)

print(transcript.text)

# GPT-4 is the latest AI system from OpenAI, the lab that created Dolly and ChatGPT. GPT-4 is a breakthrough in problem-solving capabilities. For example, you can ask it how you would clean the inside of a tank filled with piranhas, and it'll give you something useful. It can also read, analyze, or generate up to 25,000 words of text. It can write code in all major programming languages. It understands images as input and can reason with them in sophisticated ways. Most importantly, after we created GPT-4, we spent months making it safer and more aligned with how you want to use it. The methods we've developed to continuously improve GPT-4 will help us as we work towards AI systems that will empower us all.

🐻 Bear Tips: If your audio file is larger than 25MB, split it into chunks of ≤ 25 MB and transcribe them separately.

Improving the Accuracy of Transcription Using Prompts

In the first sentence of the transcription result above, there is a mistake: “GPT-4 is the latest AI system from OpenAI, the lab that created Dolly and ChatGPT”. The word “DALL·E” is misrecognized as “Dolly”.

To enhance the accuracy of the transcription, you can use the prompt parameter to provide additional context. For example:

transcript = client.audio.transcriptions.create(

model="whisper-1",

file=audio_file,

prompt="The audio could include names like DALL·E, GPT-3, and GPT-4."

)

As a result, “DALL·E” in the first sentence is transcribed correctly:

print(transcript.text)

# GPT-4 is the latest AI system from OpenAI, the lab that created DALL·E and ChatGPT. GPT-4 is a breakthrough in problem-solving capabilities. For example, you can ask it how you would clean the inside of a tank filled with piranhas, and it'll give you something useful. It can also read, analyze, or generate up to 25,000 words of text. It can write code in all major programming languages. It understands images as input and can reason with them in sophisticated ways. Most importantly, after we created GPT-4, we spent months making it safer and more aligned with how you want to use it. The methods we've developed to continuously improve GPT-4 will help us as we work towards AI systems that will empower us all.

Whisper API Alternatives

With the variety of languages and audio formats supported, as well as the customizability of the Whisper API, it is a powerful tool for developers to add the transcribing capability to their applications. However, if you prefer alternatives, there are other options available:

Amazon Transcribe

Amazon Transcribe is another service that provides transcription capabilities. Like Whisper API, it allows you to add speech-to-text capability to your applications. Furthermore, Amazon Transcribe offers specialized transcription extended from its basic transcription service:

- Amazon Transcribe Call Analytics - This API uses models that are trained to specifically understand customer service and sales calls. It can generate highly accurate call transcripts and extract conversation insights to help improve customer experience.

- Amazon Transcribe Medical - It specializes in transcribing medical terminologies such as medicine names, procedures, conditions, and diseases that are difficult to transcribe using other general speech-to-text services. It can transcribe conversations between doctors and patients for documentation purposes, add subtitles for telehealth consultations, and more.

- Amazon Transcribe Toxicity Detection - Amazon Transcribe Toxicity Detection is a voice-based toxicity detection tool that can identify and classify toxic language. It can detect hate speech, harassment, and threats from not only words but also the speech’s tone and pitch.

Google Cloud Speech-to-Text

Google Cloud Speech-to-Text is another speech-to-text API that uses advanced AI models, and it supports over 125 languages! You can select domain-specific transcription models based on your application's industry, like phone_call, medical_dictation, medical_conversation, video, and more.

Like Whisper API, you can improve the accuracy of the transcription results by specifying words and/or phrases that are special, rare, or where the audio contains noise. It is called model adaptation.

You can also protect your audio data when using Google Cloud Speech-to-Text. Despite being one of the Google Cloud products, you have the flexibility to use the Cloud version or deploy it on your infrastructure to protect the data.

🐻 Bear Tips: Not all models support model adaptation. You can look for more details from the official documentation.

Bannerbear

If you're looking to transcribe videos and add subtitles, Bannerbear is a useful tool. Different from other transcription APIs introduced above, Bannerbear is a media (images and videos) generation API that can help you automate the process of transcribing videos and adding customizable subtitles.

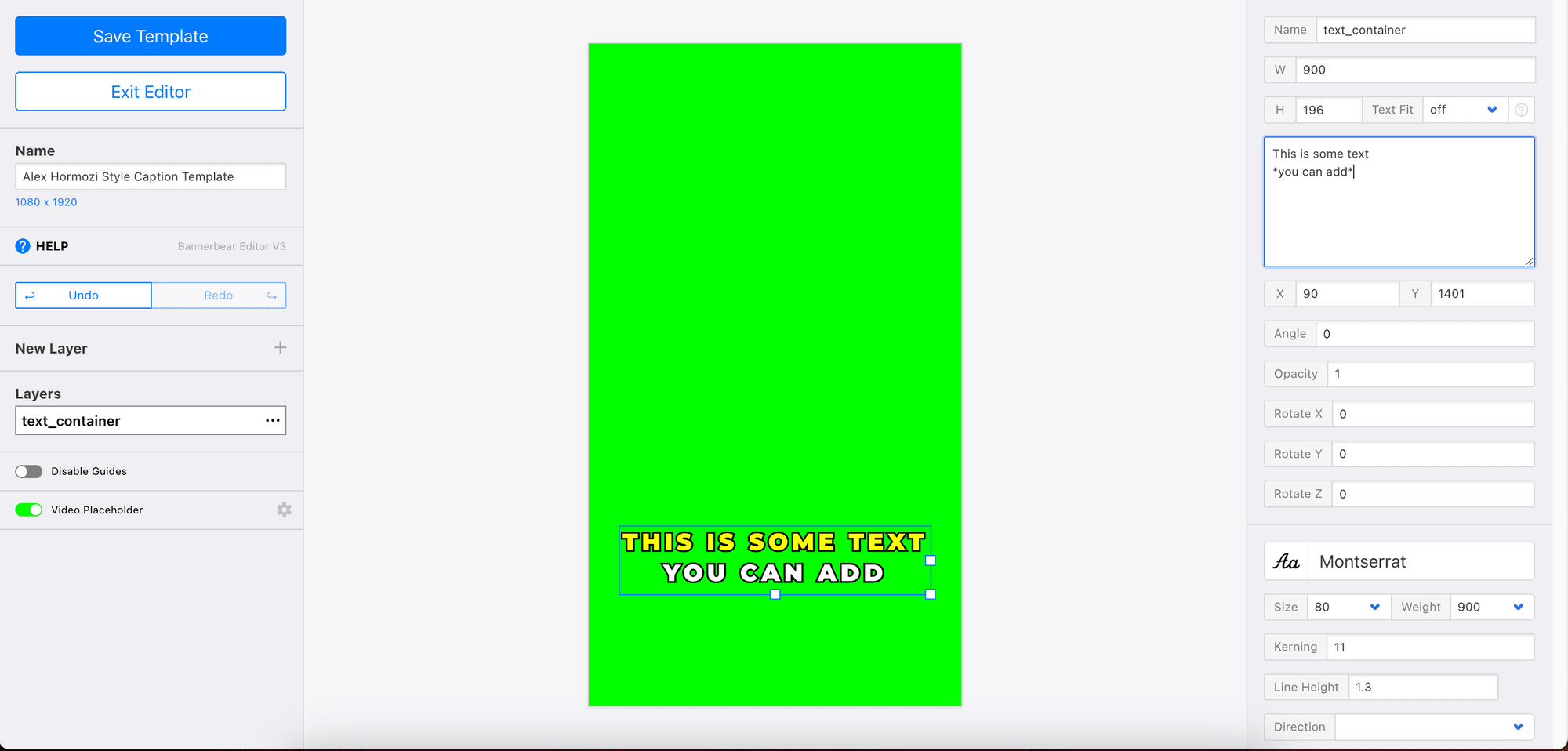

The subtitles’ font style and color can also be customized to make branded captions from Bannerbear’s template editor:

With a single API, you can transcribe a video and add branded captions to it automatically:

Bannerbear's auto-transcription is powered by Whisper and Google —you can choose either one when you create your template in Bannerbear. For more details, you can refer to these tutorials:

- Your 6-Minute Guide to Auto-transcriptions on Bannerbear

- How to Automatically Merge Video Clips, Voiceovers, and Subtitles with Bannerbear

Conclusion

With the Whisper API, you can easily integrate AI-powered transcription capabilities into your applications. If you are looking for a specialized transcription service for a specific industry or one that provides automation, other alternatives can be a good choice too.

As AI continues to evolve, we can expect even more advanced and user-friendly APIs which will further enhance our ability to create innovative and effective solutions!