Google Cloud Functions vs. AWS Lambda Test Results

Recently I ran some tests measuring performance and execution time of Google Cloud Functions against AWS Lambda. Here are my results which you may find useful.

Caveats

Before I get into the details I would like to heavily caveat with the usual disclaimer:

Your Mileage May Vary!

This article is not meant to be an attack on your personal technology choices, nor is it rigorously scientific. It is just a real world account of my experience with both services, and ultimately my decision to choose one over the other based on some tests.

The tests are highly specific to my use case, which may or may not be relevant to you, so I encourage you to view the test results through the lens of my use case - which I discuss in the next section.

Why migrate to serverless?

Bannerbear is an image generation API - you send a JSON data payload to my API and then Bannerbear generates images for you, based on templates that you have set up in the back end. Here's an example of 2 different images generated from one set of data including a title, a photo and a synopsis:

At its core, Bannerbear is a Ruby on Rails app running on Heroku. The image rendering itself is accomplished using headless browser screenshot functionality - in the past I have used both PhantomJS and Puppeteer for this purpose.

The Rails app manages incoming jobs and these are handed off to a microservice to perform the screenshot. Initially this microservice was running on a separate standard Heroku instance but I wanted to migrate this to a serverless function for these reasons:

- Speed / performance gains

- Never have to worry about concurrency

- Scale price with usage

- It seems like a logical architectural decision

What I mean by the last point is that I like the idea of keeping my Rails app lightweight, acting as an expediter to more complex functionality such as Puppeteer or FFmpeg.

Speed is most important. From a service provider perspective the most important factors to me in this serverless migration were potential speed and concurrency gains. My ultimate goal is to be able to offer faster image rendering to my customers. Cost is not an immediate concern so it is not really explored as a benchmark below - again, YMMV.

A Weekend of Hell

This adventure begins with what I thought was quite a seamless migration to Google Cloud Functions. I created a new cloud function that simply spins up a Puppeteer instance, takes a screenshot, and then closes everything down. In terms of the instance execution time, it would take about 4 or 5 seconds to finish.

I basked in the majestic efficiency of it all, promoted it to my production level microservice, then stopped thinking about it and let it run. Occasionally I would look at the Google Cloud Function logs to check everything was running smoothly, which it was.

Until about 20 days later.

Right around the time I launched a new feature and started running some production tests on it, my Google Cloud Function started to get very upset.

The new feature meant that my cloud function would see increased concurrency, let's say around 10-20 concurrent requests.

No problem! The allure of serverless is that concurrency is no longer an issue, right? I might see some delay due to instance cold-starts etc. but that would be on the order of single-digit seconds, or so I thought…

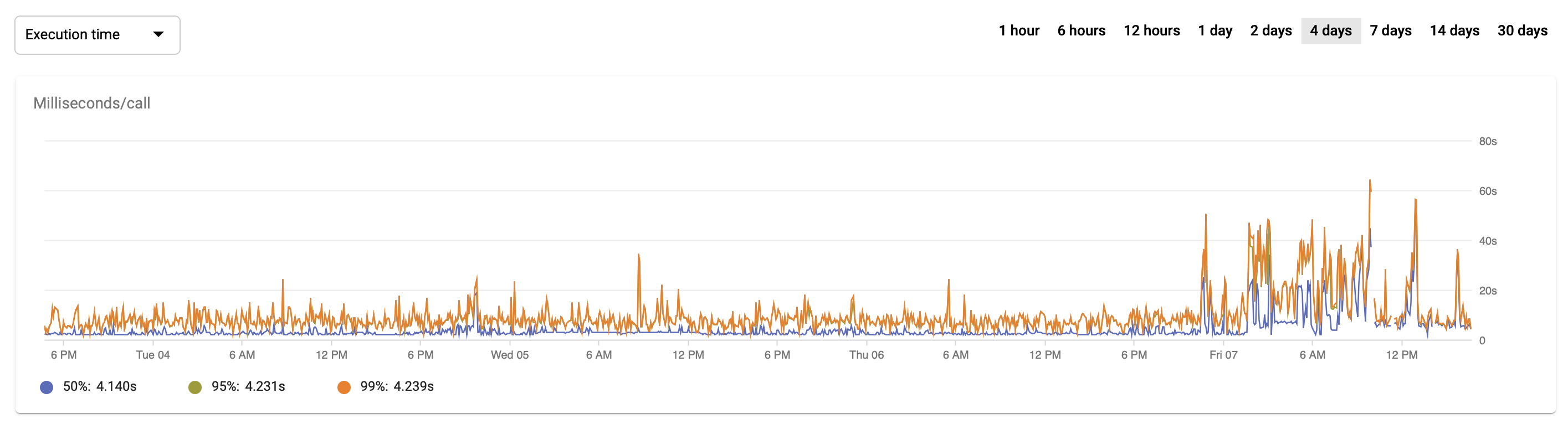

One fateful Friday, execution times started spiking, sometimes into 60 seconds. For a service that is supposed to render images in "just a few" seconds, that's uh… not good. Concurrency wasn't that much higher than before… so what's happening…?

Notice the relatively smooth area on the left? My Google Cloud Function had been running without complaint for weeks until this hiccup.

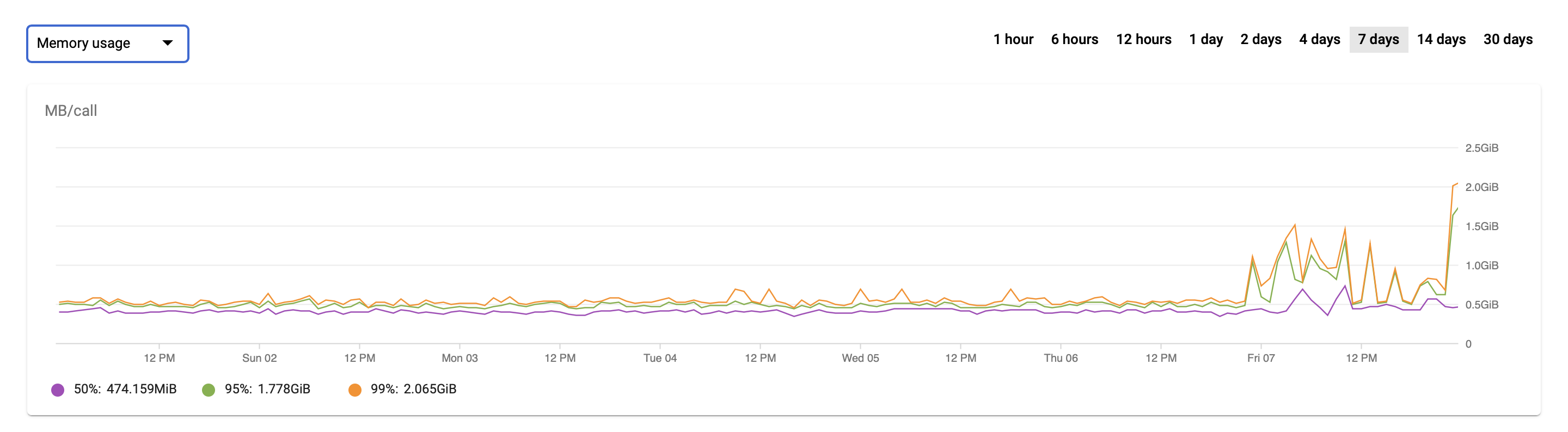

See also my memory usage going haywire:

So what changed?

To this day I still don't really know.

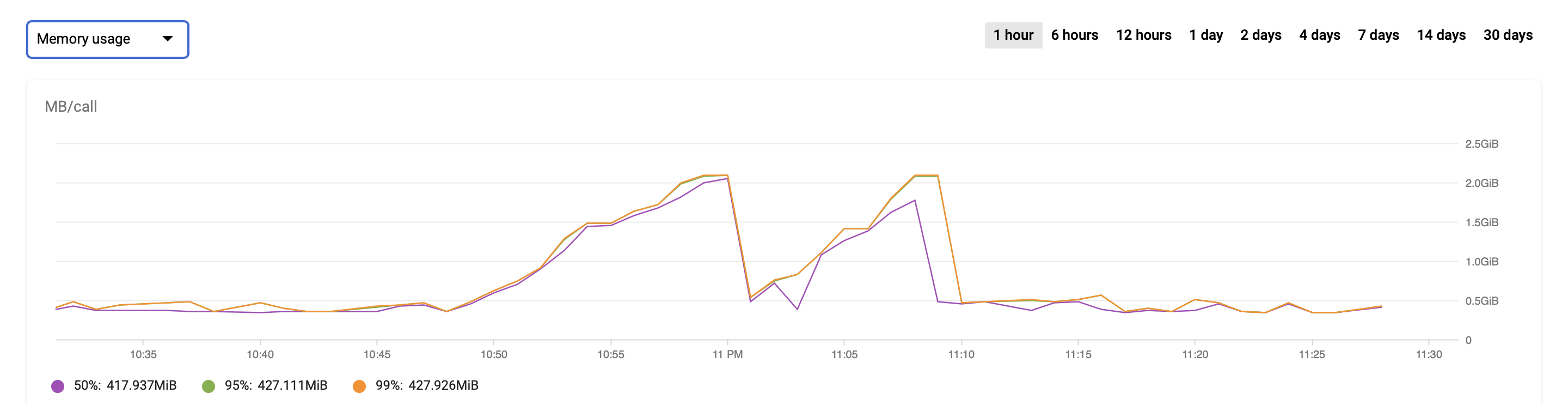

I spent the weekend exhausting all of the obvious, low-hanging fruit solutions such as ensuring Puppeteer fully shuts down, clearing out temp files, provisioning enough memory, etc. But nothing seemed to work - the memory usage on my Google Cloud Function just seemed to display leak-esque behavior:

Saturday and Sunday were spent repeating this cycle: deploying a patch, watching memory usage / execution times inflate over the next hour or two, then triggering another deploy to "hotfix" the problem while I frantically tried to diagnose the issue.

After a couple of days things just seemed to settle back down to normal without further intervention, but by this time I felt betrayed. The whole promise of serverless was that I wouldn't have to sit there like some junior sysadmin hitting a restart button whenever things got dicey.

So by now I was looking to explore other solutions. I thought to myself: perhaps GCF isn't stable? Perhaps it's not suitable for my use case? Or, even if the problem was originally in my code (which I have now fixed via 1 of 10,000 frantic monkey-patches), perhaps other solutions could be more performant?

Test Goals

The other obvious choice when it comes to serverless functions is AWS Lambda. The learning curve here was considerably steeper but I thought it would be an interesting test to pit the services against each other when performing the exact same function.

My goals were simple:

- Understand which service performs fastest

- Understand which service is more reliable

I would be looking at average image render time as an indicator of which is fastest. To determine reliability, I would look at things like the standard deviation of image render time, as well as HTTP response - you would expect a response 100% of the time but in reality it seems sometimes the services fail to even respond.
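The two indicators could be computed from raw monitoring samples along these lines. This is only a minimal sketch, not the analysis behind the results below: the `summarize` helper and the sample values are hypothetical, and a sample of `None` stands for a request that never got a response.

```python
from statistics import mean, stdev
from typing import Optional


def summarize(samples_ms: list[Optional[float]]) -> dict[str, float]:
    """Summarize response times; None marks a request that never responded."""
    answered = [s for s in samples_ms if s is not None]
    return {
        "mean_ms": mean(answered),    # speed indicator
        "stdev_ms": stdev(answered),  # reliability: spread of render times
        # reliability: share of requests that got any response at all
        "response_rate": len(answered) / len(samples_ms),
    }


# Hypothetical samples, for illustration only.
summary = summarize([3000.0, 3500.0, None, 4000.0])
print(summary)
```

Note that `stdev` is the sample standard deviation and needs at least two answered requests.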

Running the Test

I ported my Google Cloud Function to an AWS Lambda function, which actually was simpler to do than I thought it would be. I used the Serverless.com framework to wrap a real deployment workflow around everything - something that I felt was missing with Google Cloud Functions.

With Serverless and AWS I could actually test my Lambda function locally / offline, deploy to development, and deploy to production, all from the CLI.

With Google Cloud you paste code into a text box and hope for the best. I'm slightly exaggerating as there are ways to push code from a Google code repository into deployment, but I didn't explore that option as the documentation seemed complicated.

Serverless.com + AWS gave me a Heroku-like workflow that I as a developer am much more familiar with: develop and test locally, deploy to remote via command line.

Both the Lambda function and the Google Cloud Function were given 2 GB of memory to work with.

The Test Tasks

I would run two types of test.

The tests would hit an API endpoint, which would render a screenshot of a website and then return the image inline.

For the first test, to simulate real world conditions, the website would be randomised each time from a list of Bannerbear and non-Bannerbear sources.

For the second test I would use the same website each time. This is less indicative of real world performance but I thought it would be useful data to have.

I would run these tasks automatically every minute for a 24 hour period, adding in some random manual requests of my own of varying concurrency to add a chaos monkey element to the tests.
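As a rough sketch of what that schedule amounts to, assuming one request per minute for 24 hours: the URL lists and the `build_schedule` helper below are hypothetical placeholders, since the actual list of sources is not given here, and the manual "chaos monkey" requests are left out.

```python
import random

# Hypothetical URLs; the real Bannerbear and non-Bannerbear sources are not listed.
RANDOM_POOL = [
    "https://example.com/",
    "https://example.org/",
    "https://example.net/",
]
FIXED_URL = "https://example.com/"

MINUTES_PER_DAY = 24 * 60


def build_schedule(randomized: bool, seed: int = 0) -> list[tuple[int, str]]:
    """Return (minute_offset, url) pairs: one request per minute for 24 hours."""
    rng = random.Random(seed)  # seeded so a run can be reproduced
    return [
        (minute, rng.choice(RANDOM_POOL) if randomized else FIXED_URL)
        for minute in range(MINUTES_PER_DAY)
    ]


random_test = build_schedule(randomized=True)   # first test: random website
fixed_test = build_schedule(randomized=False)   # second test: same website
print(len(random_test), len(fixed_test))
```

Each test therefore produces 1,440 scheduled requests per endpoint before any manual requests are added.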

Here Comes a New Challenger!

To make the test a bit more interesting I thought I would benchmark against a 3rd party screenshot service that under the hood uses PhantomJS. There are quite a few of these, and they all do roughly the same thing so I don't think it's important to name the service. The point was not to measure the performance of the 3rd party, but simply to have another set of data to anchor the Google and Amazon data to.

So to recap, my tasks are:

- Hit an endpoint which loads a random website, renders it as a screenshot and returns the image

- Hit an endpoint which loads the same website, renders it as a screenshot and returns the image

And my endpoints:

- The original Google Cloud Function endpoint (using Puppeteer)

- A ported AWS Lambda endpoint (using Puppeteer)

- A 3rd Party PhantomJS endpoint

Monitoring Response Time

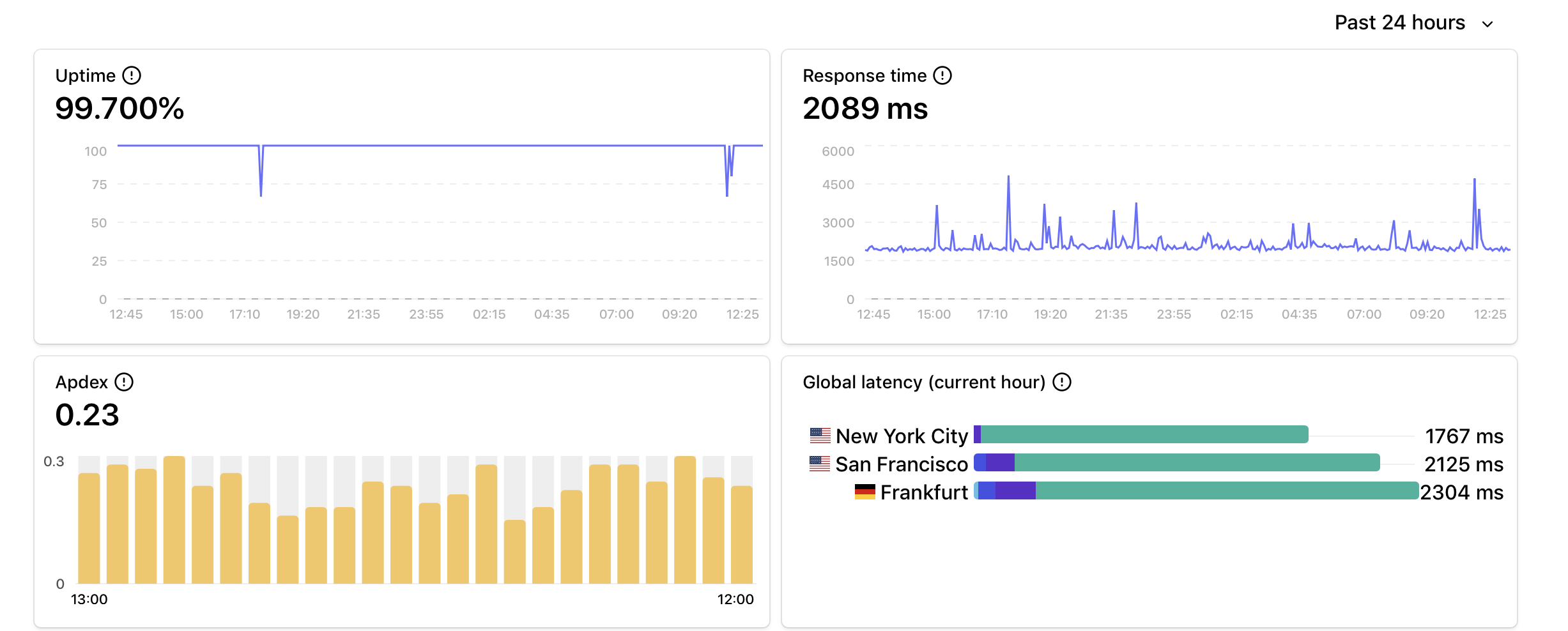

The metric I am measuring is response time.

That is, how quickly the service renders the image and returns it inline as the response.

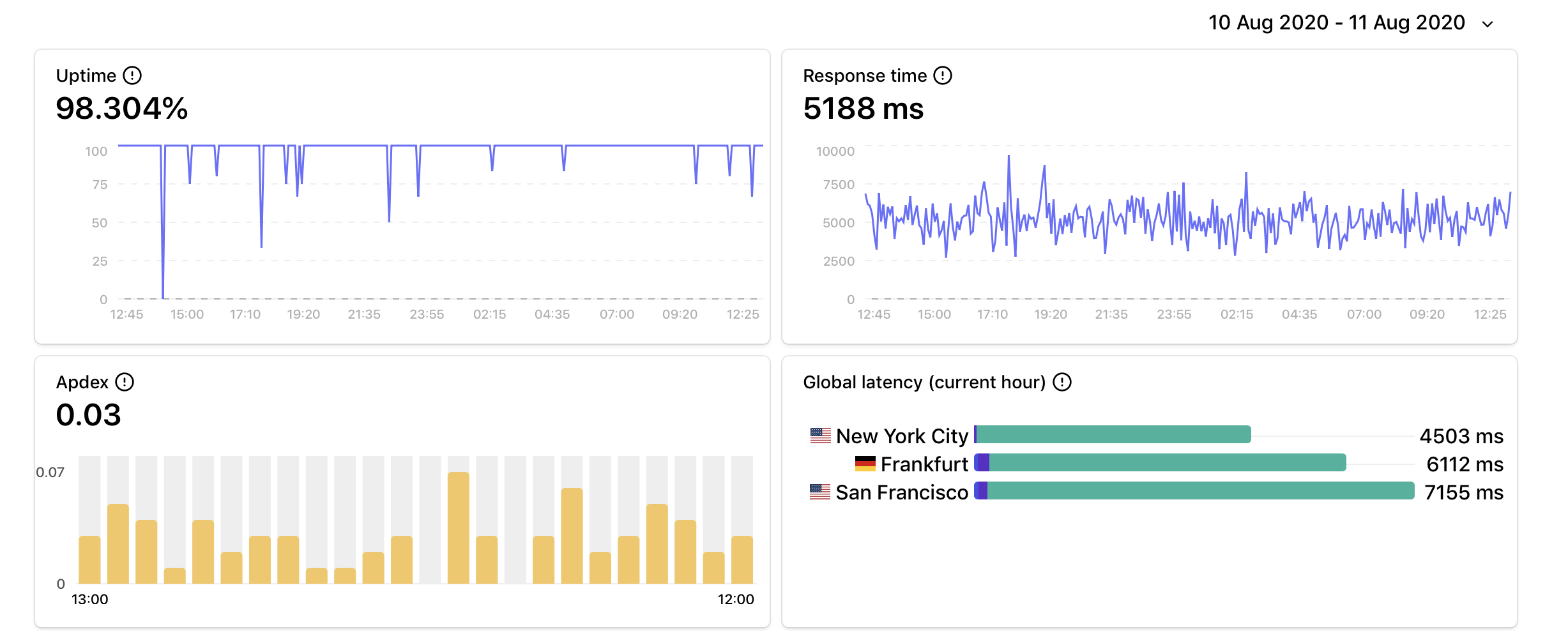

I signed up with monitoring service Hyperping in order to set up a monitor that pings my endpoints regularly, and records the response time in pretty charts for me to view.
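Hyperping did the measuring in these tests, but the underlying idea is just timing a request from start to finished response. A minimal sketch of that idea follows; `timed_ms` and `fake_screenshot_request` are hypothetical names, and the fake request only sleeps instead of calling a real endpoint.

```python
import time
from typing import Callable


def timed_ms(request: Callable[[], bytes]) -> tuple[bytes, float]:
    """Call request() and return its result plus elapsed wall-clock time in ms."""
    start = time.perf_counter()
    body = request()
    elapsed_ms = (time.perf_counter() - start) * 1000
    return body, elapsed_ms


def fake_screenshot_request() -> bytes:
    """Stand-in for an HTTP call to a screenshot endpoint (hypothetical)."""
    time.sleep(0.05)   # pretend the render takes about 50 ms
    return b"\x89PNG"  # placeholder body: the first bytes of a PNG signature


body, elapsed_ms = timed_ms(fake_screenshot_request)
print(f"{len(body)} bytes in {elapsed_ms:.0f} ms")
```

Measured this way, the number includes network latency as well as render time, which is what a customer of the API would experience.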

Test Results

Randomized Website

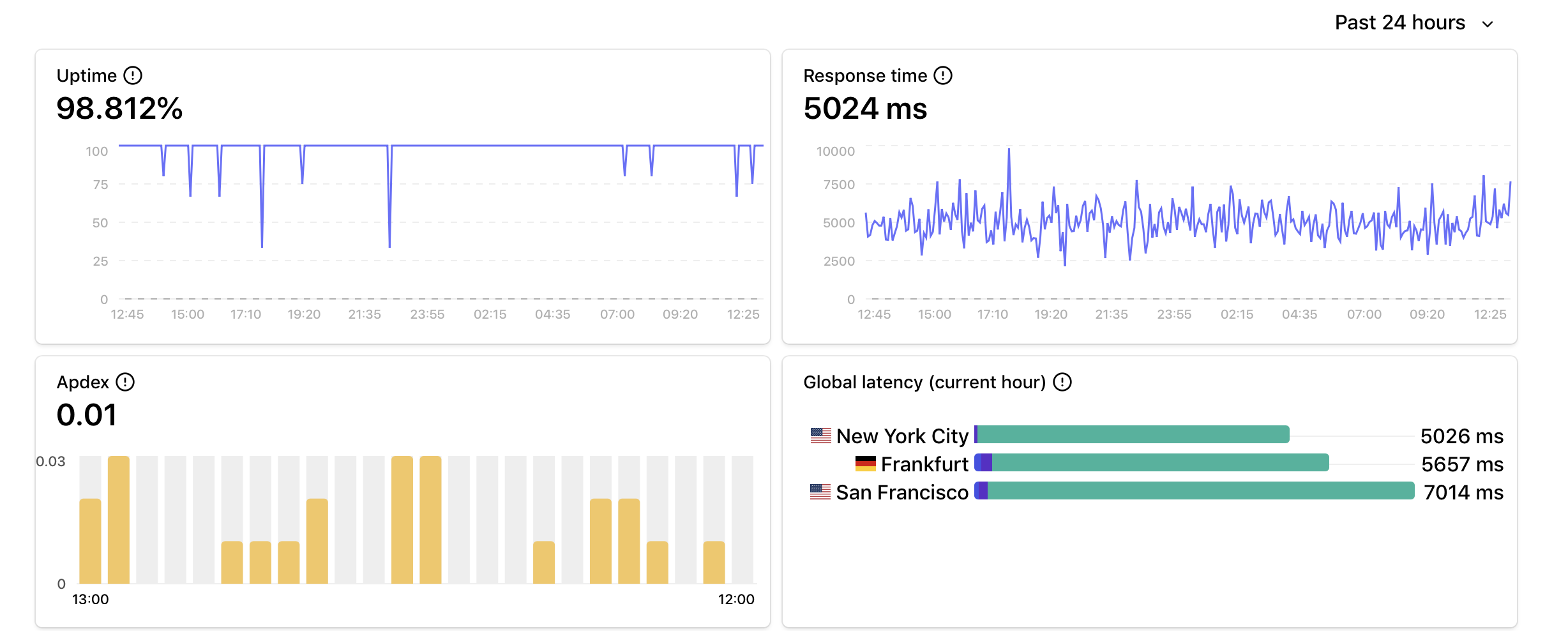

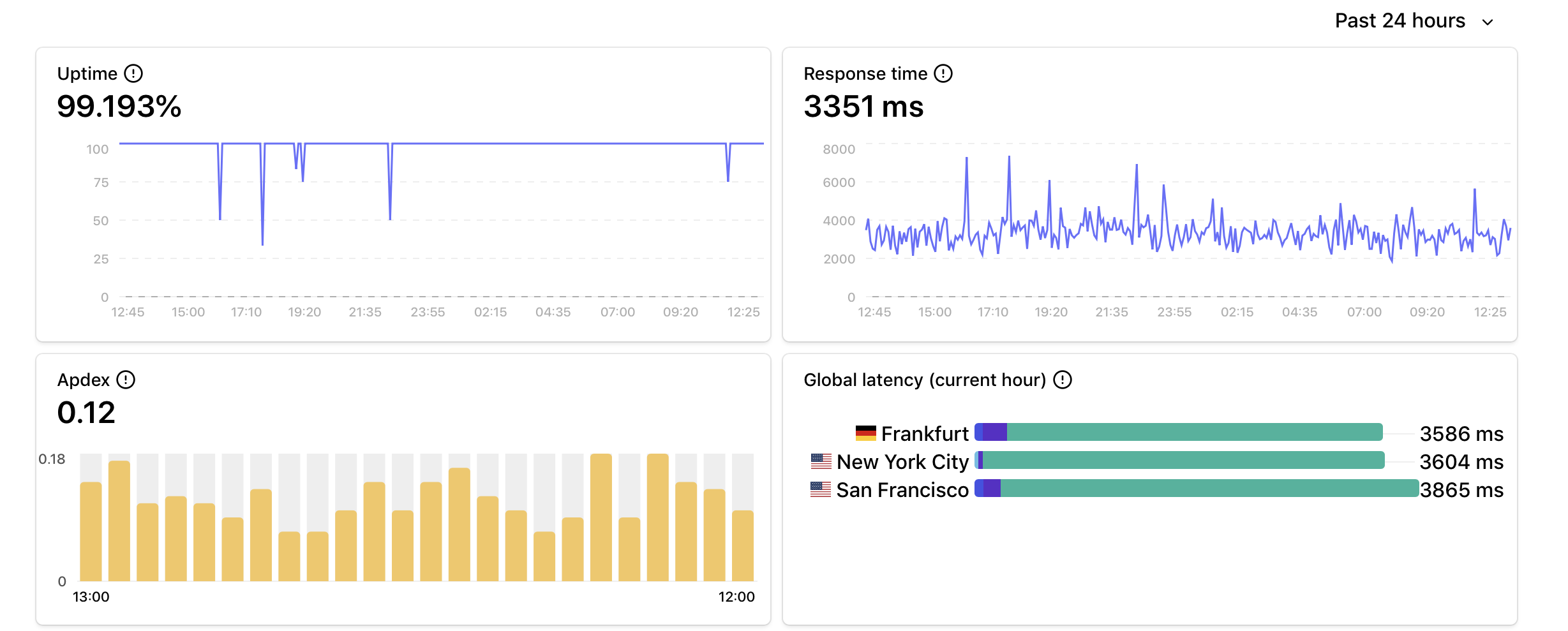

3rd party PhantomJS: 5188ms

Google Cloud Function: 5024ms

AWS Lambda: 3351ms

Benchmark Website

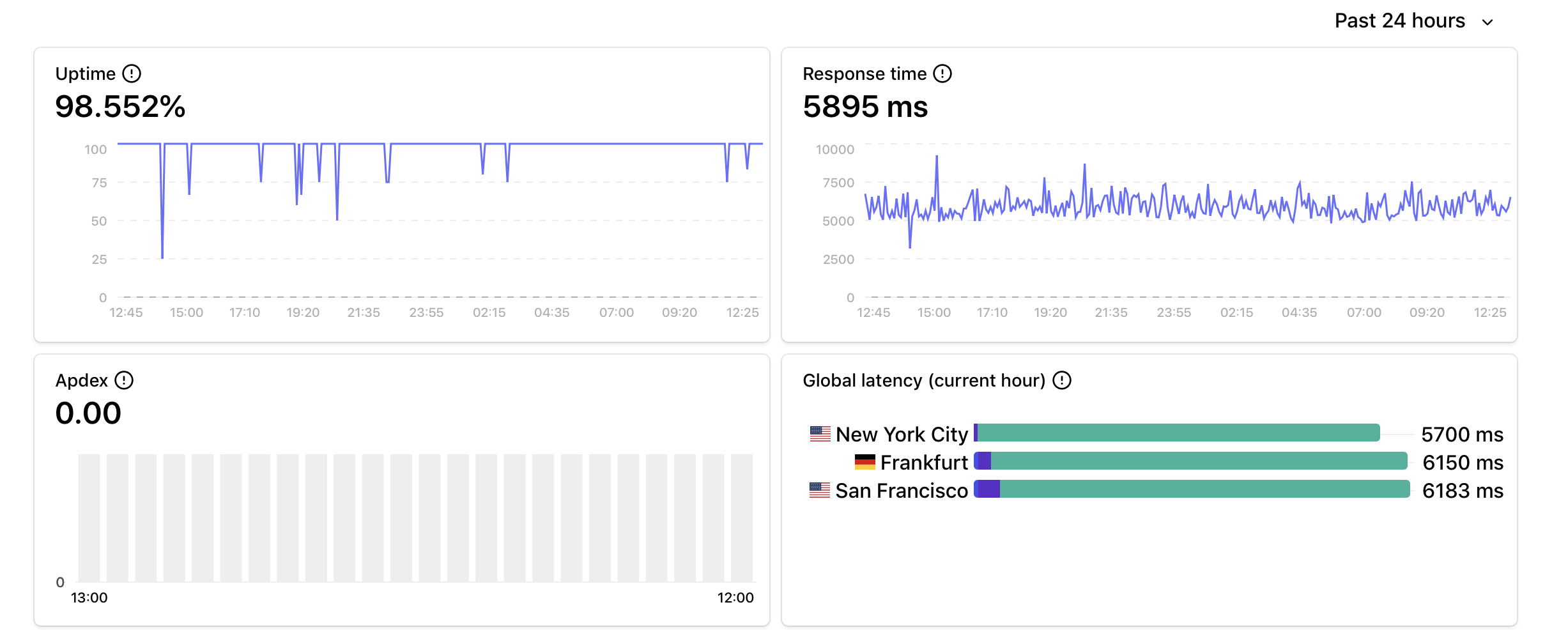

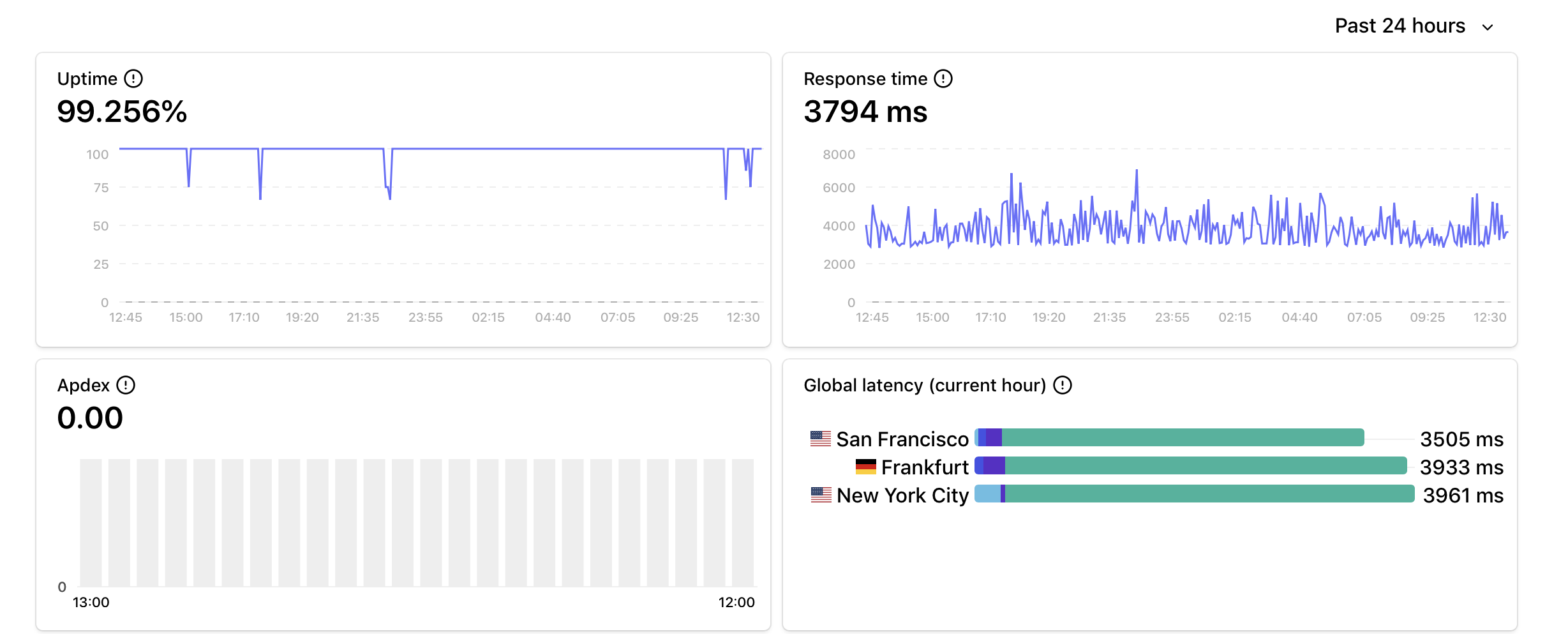

3rd party PhantomJS: 5895ms

Google Cloud Function: 3794ms

AWS Lambda: 2089ms
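To put those numbers in relative terms, here is a small worked calculation using only the figures reported above. The `reduction_pct` helper is just an illustrative name for the usual percentage-reduction formula.

```python
# Response times in milliseconds, copied from the results above.
RESULTS_MS = {
    "randomized": {"phantomjs": 5188, "gcf": 5024, "lambda": 3351},
    "benchmark": {"phantomjs": 5895, "gcf": 3794, "lambda": 2089},
}


def reduction_pct(baseline_ms: float, candidate_ms: float) -> float:
    """Percentage by which candidate's response time is lower than baseline's."""
    return (baseline_ms - candidate_ms) / baseline_ms * 100


for test, times in RESULTS_MS.items():
    vs_gcf = reduction_pct(times["gcf"], times["lambda"])
    print(f"{test}: Lambda response time is {vs_gcf:.1f}% lower than GCF")
# randomized: Lambda response time is 33.3% lower than GCF
# benchmark: Lambda response time is 44.9% lower than GCF
```

In other words, Lambda responded in roughly a third less time on the randomized test and a little under half the time saved on the benchmark test, relative to Google Cloud Functions.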

Conclusion

In terms of raw performance, AWS Lambda was the clear winner.

This alone was convincing enough for me to switch to using the Lambda full time.

Another benefit though (YMMV) was that the rest of my infrastructure is also on AWS, so I also see some network latency benefits when my app does things like take the returned image and store it to S3.

Overall, the end-to-end image rendering time, measured from the user's request, through the image being saved, to the JSON response being returned to the user, has been roughly cut in half by migrating from Google Cloud Functions to AWS: from an average of 10 seconds total to under 5 seconds.

Concurrent image rendering also performs much faster and so far I have been able to throw as many concurrent requests as I want at the Lambda without it breaking a sweat.

I hope this has been an interesting read but remember, your situation may be very different to mine depending on what you need to use serverless for and physically where the rest of your infrastructure is located. This article isn't meant to demonstrate that AWS Lambda is the best, just that it's the best choice for me :)

I'll round off with some pros and cons that I've observed in using these two technologies.

Google Cloud Functions: Pros and Cons

✓ Easy to get started, comes packaged with an HTTP endpoint

✓ Simple and clear monitoring tools

✗ Slower performance for my above task

AWS Lambda: Pros and Cons

✓ Faster performance for my above task

✓ Excellent monitoring tools with massive customization potential

✓ More familiar workflow via serverless.com

✗ Steeper learning curve